GPT 5.1: Codex-Max and Other Upgrades

We’re talking GPT 5.1, the update that didn’t try to be a revolution, but instead sought to be useful. It’s like a version 5 but with a better sense of timing, mood control, and fewer long-winded detours. It arrived as a response to developer grumbles and user gripes about GPT 5, and OpenAI’s message is simple; same broad intelligence, smarter about when to think hard and when to just answer.

Here’s the idea, PT-5.1 adjusts how much “thinking” it does depending on the task. For quick, everyday queries, it spends fewer tokens, so it’s faster and cheaper. For knotty, multi-step problems, it digs deeper and checks itself more thoroughly. That’s the “adaptive reasoning” trick in a nutshell. OpenAI says this makes the model noticeably snappier on simple tasks without sacrificing the heavy-lifting when you actually need it.

A small example that shows the difference: try running an npm command to list global packages. GPT 5 used to take longer and use more tokens; GPT 5.1 replies almost instantly, and with less fuss. It’s a tiny thing but small things add up when you’re running thousands of calls.

What’s new

- Adaptive reasoning: dials effort up or down depending on complexity.

- Two operational modes in ChatGPT: Instant for quick chatty interactions and Thinking for deeper work. These are matched automatically, and paid users saw the rollout first.

- Personality presets and tone controls: eight built-in styles (Default, Professional, Friendly, Candid, Quirky, Efficient, Nerdy, Cynical) so the bot sounds the way you want it to. Yes, emojis. Yes, warmth.

- Better coding tools: an apply_patch tool for editing code reliably, a shell tool for running shell commands, and a more steerable coding personality so code suggestions feel less… dramatic. OpenAI also partnered with several coding startups for tuning.

- Extended prompt caching (up to 24 hours) to speed follow-up queries and cut costs.

Comparing GPT 5.1 to GPT 5

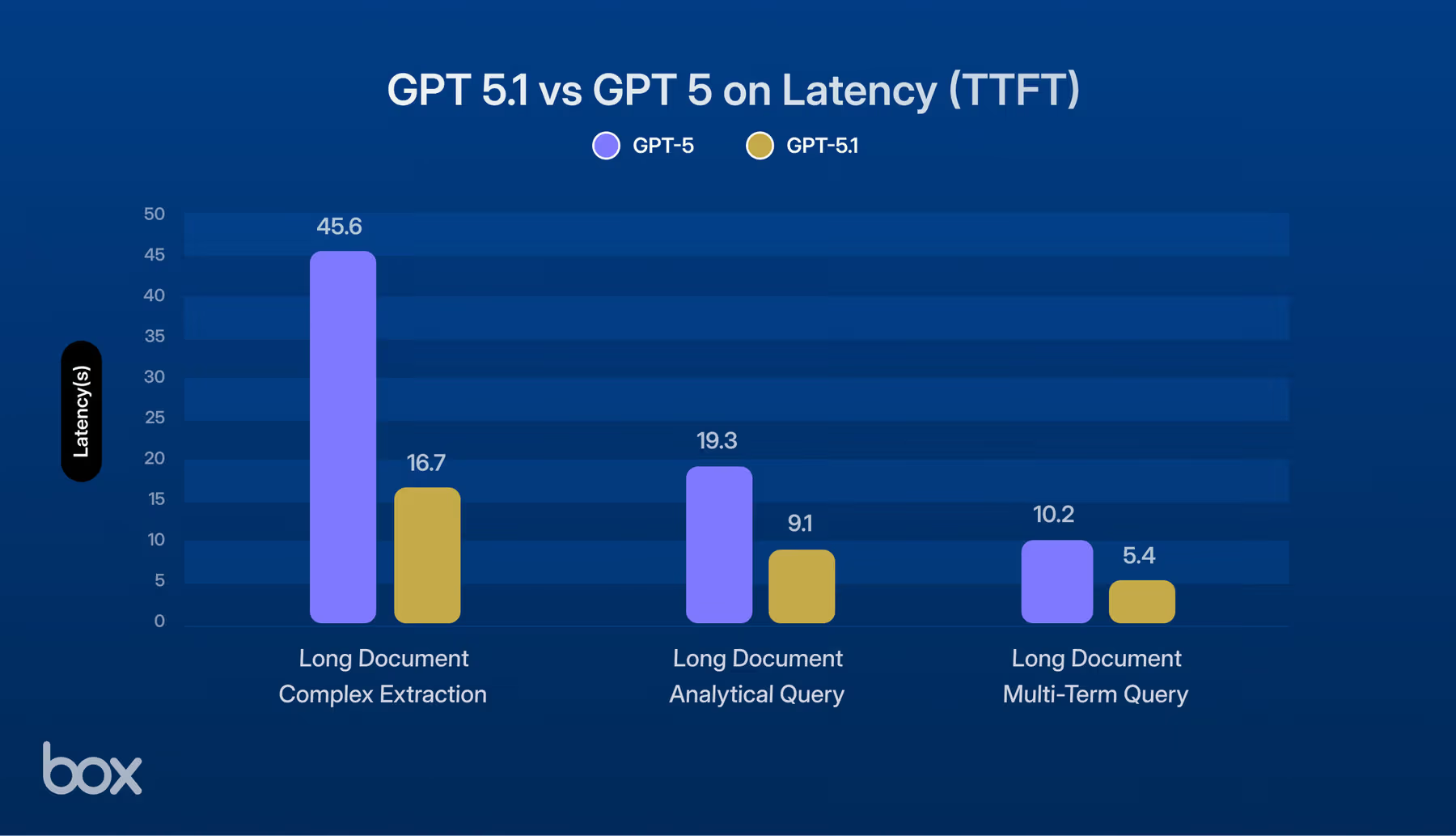

Speed on simple tasks: GPT 5.1 is noticeably faster and uses fewer tokens for trivial queries. OpenAI gives an example where a simple command is answered in ~2 seconds instead of ~10. GPT-5.1’s “Instant” behavior is tuned for that.

Reliability on hard tasks: GPT-5 kept strong reasoning abilities; GPT-5.1 aims to be smarter about when to apply heavy thinking. For sustained, tool-heavy workflows (like multi-step coding), GPT-5.1 typically performs equal or better and, in some coding benchmarks, noticeably better.

Personality and tone: 5.1 broadens conversational presets, everything from “Friendly” to “Cynical” and introduces easier ways to tune tone. It’s a little warmer, a little more customizable, and less clinical than some users found GPT-5.

Codex-Max: the long-haul coder

Codex-Max is the headline feature for anyone who wants AI to work on code for hours, not minutes. It’s built on GPT-5.1’s architecture but tailored for software engineering: it compacts its session history automatically to stay within context limits, can iterate on failing tests, and was shown internally to complete tasks spanning more than 24 hours. That means large refactors, multi-file PRs, and long agent loops are now plausible without the session falling apart. In practical terms: imagine an assistant that keeps chipping away on a refactor overnight and lands waking you up with a green test suite. It’s impressive and also raises questions

Why this matters

If you’re a developer, the cost and latency improvements and Codex-Max open up real workflow wins. Moreover, building a product that requires quick answers or smooth code suggestions, GPT-5.1 is meant to feel better. Faster turnaround on routine tasks means lower latency for users and lower token bills for teams. If you’re a writer or a casual ChatGPT user, the personality controls mean you can make the bot sound warmer or more businesslike without having to rewrite your prompts like a diplomat.

OpenAI highlighted improvements on coding benchmarks and shared partner testimonials (companies like Warp, Augment Code, and Cognition reported better speed and more accurate edits). A financial firm, Balyasny Asset Management, said GPT-5.1 outperformed GPT-5 on their tests while running 2–3x faster, not trivial for large-scale usage.

What people like

A lot of the applause is practical. Faster responses for simple queries. Better, quieter iteration when editing code. Cleaner follow-ups in a conversation. The personality presets also give people a mental shortcut: instead of endlessly fiddling with wording to get a tone you like, you pick a preset and go. For busy teams, those little UX wins feel huge. Some said “GPT-5.1 is the next advancement in the GPT-5 series,” they wrote, pointing to adaptive reasoning and developer tools. Partners chimed in too. Warp said they’re making GPT-5.1 the default, and Balyasny said it “outperformed both GPT-4.1 and GPT-5 in our full dynamic evaluation suite.” Sam Altman also tweeted that “GPT-5.1 is out! It’s a nice upgrade,” noting improvements in instruction-following and adaptive thinking.

Where the cracks are showing

Not everyone is thrilled. The GPT-5 rollout earlier this year already had mixed reviews: some engineers said it underdelivered compared to the hype, and critics pointed to marketing claims and selective benchmarks as overstated. Wired and others noted that while GPT-5 was cost-efficient, it wasn’t uniformly better at code quality than competitors like Anthropic’s models and that disappointed some early adopters. GPT-5.1 is partly an answer to that skepticism, but critics still want independent, reproducible benchmarks.

There’s also an uneasy conversation about “personality.” Giving a bot a more human-sounding tone is useful. It’s also a slippery slope. Some commentary has asked: When does warmth become manipulation? Or more bluntly should a corporation tune an AI to be more likable when that makes it easier to get people to accept wrong answers? Ars Technica called the personality rollout a “tricky tightrope,” noting the balance between making AI pleasant and avoiding user confusion about whether the system is a tool or a being.

And then there’s the evergreen complaint: transparency. OpenAI’s own benchmarks and partner testimonials are informative — but independent evaluations still matter. Academics and engineers want open, reproducible tests that show where the model actually improves and where it still trips up. That demand hasn’t gone away.

The bottom line

GPT-5.1 is iterative. It fixes tone, tightens speed, and gives developers better controls. For many real-world products, those refinements will feel like a big deal. For the hype-hungry, it won’t be the moonshot they imagined.

If you use AI a lot, you’ll notice the difference in day-to-day flow: responses that don’t overthink simple things, and deeper crawling when you actually need a chain of thought. If you care about independent verification, you’ll keep asking for open benchmarks and you’ll want to watch how OpenAI handles critique around transparency and marketing claims.